The World Wide Web is a treasure trove for information seekers, with vast amounts of data spread across innumerable websites, platforms, and domains.

Anyone who can harness this data efficiently can gain a real advantage in market research and uncover valuable insights.

Modern technology and problem-driven solutions play a paramount role in automating various data-related tasks.

Here, list crawlers come to the rescue: they navigate the labyrinth of online data for users, extracting relevant statistics and organizing them into structured lists.

Discovering and organizing relevant data from the ocean of information available online can be a daunting task. Users need a tool that lets them extract, analyze, and categorize useful data proficiently.

This modern tool aids users in capturing structured information, streamlining research, saving time, and unlocking hidden treasures by automating the process of extracting online data.

In this blog, we help you learn about list crawlers, discuss their features, types, pros and cons, and some facts that aid users across different domains.

We will dive into the technical and operational aspects of list crawlers and look at how they navigate complex web structures, handle different kinds of content, and adapt to diverse list formats.

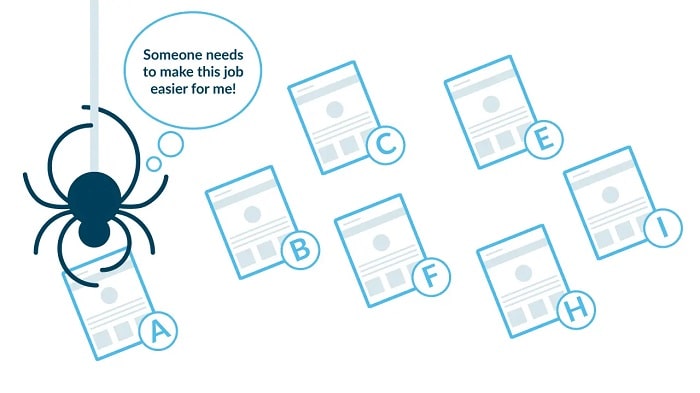

What are list crawlers?

Advances in algorithms and artificial intelligence have driven the development of web crawlers, which are closely related to web scrapers. These programs are designed to extract relevant data from a variety of websites according to the user's requirements.

Developers build these automated programs to assist human search and to access online platforms for the required information.

Web scrapers use innovative scraping and data analysis techniques to help users make sense of the vast amount of varied online content.

These indexers play a crucial role in online search engines such as Google and Bing. They help users explore the titles of web pages in a specific domain.

In the next stage, the collected information is stored in a database, which acts as the backbone for searches and enables the display of the desired results.

It is important to note that list crawlers focus on pages that contain hyperlinks. They do not list all the pages of a website randomly.

Pages without any links, as well as sub-domains and external domains, are left out of the crawling process.

Additionally, they determine the relevance of each file. In this process, the indexer excludes image files from the crawl, since these often carry only data about their origin rather than the content needed for the results.

The foremost aim of list crawlers is to streamline the search process by indexing the linked pages of a site, which improves the relevance of search results.

To make the retrieved information more relevant, a list crawler ensures that search results match the user's requirements and improves the effectiveness of the overall process.

How are list crawlers designed?

List crawlers are designed with great attention to working effectively and helping users collect vital information from thousands of websites.

The process starts at a chosen location on the website, such as a directory deep in the site or any other identified entry point, and begins indexing pages from there.

From that starting point, they follow each link systematically and capture data from every page they visit.

After the crawl is complete, these crawlers list the collected information in a user-friendly format.
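
The link-following step described above can be illustrated with a minimal sketch. It assumes a breadth-first traversal, which is one common choice and not necessarily what any given list crawler uses. The `SITE` dictionary is a hypothetical in-memory stand-in for a real website, and `crawl` is an illustrative helper name.

```python
from collections import deque

# Hypothetical in-memory "website": each page maps to the links it contains.
SITE = {
    "/": ["/products", "/about"],
    "/products": ["/products/1", "/products/2", "/"],
    "/about": ["/"],
    "/products/1": [],
    "/products/2": ["/products/1"],
}


def crawl(start, get_links):
    """Visit pages breadth-first from `start`, following each link only once."""
    visited = []          # pages in the order they were crawled
    seen = {start}        # pages already queued, so loops are not followed twice
    queue = deque([start])
    while queue:
        page = queue.popleft()
        visited.append(page)
        for link in get_links(page):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return visited


pages = crawl("/", lambda page: SITE.get(page, []))
print(pages)
```

A real crawler would replace the lambda with a function that downloads the page and extracts its links. The `seen` set is what keeps the crawler from looping forever when pages link back to each other.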

This data gives you valuable insight into the areas where you need to improve or where you need information to seize opportunities.

Apart from that, these smart indexers help users identify dead ends within their websites, that is, links that lead to non-existent or inaccessible pages.

They also help identify duplicate content and guide you in correcting these issues, giving visitors a smoother experience on your website.

With the help of web crawlers, you not only optimize your platform for users but also eliminate dead ends, resolve duplicate content issues, and enhance the overall user experience.
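
As a rough illustration of how dead ends and duplicate content might be flagged once a crawl has finished, the sketch below works on hypothetical crawl results. The `PAGES` data, the function names, and the choice to compare pages by a hash of their normalized text are all assumptions made for this example. Real tools typically use more tolerant similarity checks.

```python
import hashlib

# Hypothetical crawl results: URL -> (HTTP status code, page text).
PAGES = {
    "/": (200, "Welcome to our shop"),
    "/home": (200, "Welcome to our shop"),
    "/products": (200, "Product list"),
    "/old-offer": (404, ""),
}


def find_dead_links(pages):
    """Return URLs whose status code signals a missing or inaccessible page."""
    return sorted(url for url, (status, _) in pages.items() if status >= 400)


def find_duplicates(pages):
    """Group URLs whose normalized text is identical (exact duplicates only)."""
    by_hash = {}
    for url, (status, text) in pages.items():
        if status != 200:
            continue  # skip pages that did not load
        normalized = text.strip().lower()
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        by_hash.setdefault(digest, []).append(url)
    return [sorted(urls) for urls in by_hash.values() if len(urls) > 1]


print(find_dead_links(PAGES))
print(find_duplicates(PAGES))
```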

This ultimately leads to satisfied visitors, which in turn attracts and retains a large audience for your website.

Types of list crawlers

Different types of crawlers cater to users' specific needs. They are listed below:

- Basic crawlers: These are the simplest type of crawler. They collect data from a single website or web page and are well suited to scraping static websites.

- Advanced crawlers: When a user needs to navigate several websites, follow links, and consolidate data from dynamic websites and web pages, these crawlers make the job much easier by automating the intricate data collection process.

- E-commerce crawlers: Designed for the e-commerce industry and retailers, they gather product details, prices, and customer reviews from various e-commerce websites, which is useful when a business wants to set its pricing strategy.

- Social media crawlers: In case the user wants to extract information regarding people’s sentiments on online platforms or track the latest trends in the market, these crawlers collect the required data from different social media platforms and consolidate it for analysis.

- Search engine crawlers: These indexers follow links and index content to help search engines such as Google and Bing index web pages and make them searchable. They also keep search results up to date.

- Vertical Search crawlers: For fulfilling the requirements of specific industries, these crawlers search various niche-specific websites or directories and collect specific data according to demand, for example, real estate listings or job postings.

Why do you need list crawlers for your website?

To get an edge for your website and to increase visitors, accessibility, and the overall user experience, you must have list crawlers on your side. Here are some important reasons why you need them for better performance on your website:

1. Search Engine Optimization (SEO):

- The crawlers offer vital information to search engines that is necessary for your website to rank.

- They deep dive into different sources, such as page titles, meta descriptions, URLs, etc., to extract information.

- By indexing pages of your website, they ultimately enhance your website’s online visibility in search results and attract organic traffic.

2. Make your website discoverable.

- These indexers make your website accessible to users who are looking for specific keywords, increasing the chance of your website appearing in search results.

- To make a website discoverable and include it in search engine indexes, list crawlers analyze your content and arrange it in the required format.

3. Increase traffic on the website:

- Crawlers help you earn organic traffic on your website by efficiently crawling your page.

- If your website contains specific content regarding a specific domain, then whenever users search for relevant content, search engines lead them to your website.

4. Enhance the user experience:

- They improve the user experience by helping search engines deliver accurate and relevant search results.

- When a user searches for certain information, list crawlers help your web page be considered for the search results.

5. Promote website content:

- In the vast world of online content, they make it easier for users to find and access your website, which helps promote your online content.

- This ultimately helps potential audiences engage with, share, and link to your content, amplifying its reach.

6. Provide an advantage over others:

- List crawlers help you outpace other rival websites when it comes to online searches through proper optimization of your website.

- They offer you a competitive edge over other ranking websites and attract more relevant users to your webpage.
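
Point 1 above notes that crawlers read page titles and meta descriptions. As a minimal sketch of that extraction step, the example below uses Python's standard `html.parser` module. The class name `PageMetadataParser`, the helper `extract_metadata`, and the sample HTML are illustrative assumptions, not part of any particular crawler.

```python
from html.parser import HTMLParser


class PageMetadataParser(HTMLParser):
    """Collect the <title> text and the meta description of one HTML page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attributes.get("name") or "").lower() == "description":
            self.description = attributes.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        # Only text that appears inside <title>...</title> is kept.
        if self._in_title:
            self.title += data


def extract_metadata(html):
    parser = PageMetadataParser()
    parser.feed(html)
    return {"title": parser.title.strip(), "description": parser.description}


# Hypothetical page used only for this example.
SAMPLE_HTML = """<html><head>
<title>Blue Widgets | Example Shop</title>
<meta name="description" content="Hand-made blue widgets.">
</head><body><h1>Blue Widgets</h1></body></html>"""

print(extract_metadata(SAMPLE_HTML))
```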

Top 5 list crawlers for your website

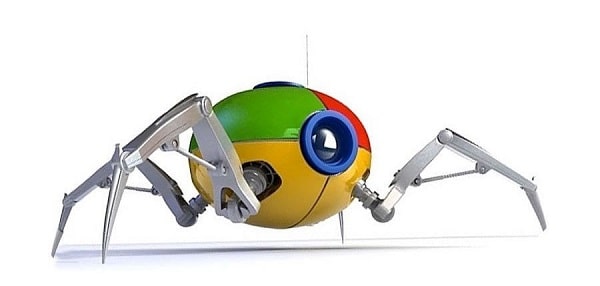

Googlebot

Googlebot Desktop and Googlebot Smartphone are both expected to crawl your site.

However, they share the same user agent token in robots.txt, preventing you from specifically targeting one over the other using robots.txt.
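
To show what targeting a crawler by its user agent token looks like, here is a small sketch using Python's standard `urllib.robotparser` module. The robots.txt content and the `example.com` URLs are made up for illustration. A rule written for the `Googlebot` token applies to any crawler that identifies itself with that token, which is why the desktop and smartphone variants cannot be separated this way.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: one group for the Googlebot token, one for everyone else.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /private/

User-agent: *
Disallow: /tmp/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# The Googlebot group blocks /private/ for any crawler using that token.
print(parser.can_fetch("Googlebot", "https://example.com/private/report.html"))
# Other paths stay open to Googlebot.
print(parser.can_fetch("Googlebot", "https://example.com/blog/post.html"))
# Bingbot falls under the "*" group, which only blocks /tmp/.
print(parser.can_fetch("Bingbot", "https://example.com/private/report.html"))
```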

Bingbot

Bing offers a tool similar to Google’s called “Fetch as Bingbot” in Bing Webmaster Tools.

It enables you to request a page to be crawled and displayed in the same way Bingbot views it, revealing any discrepancies from your intended page appearance.

Slurp Bot

To be included in Yahoo Mobile Search results, websites should grant access to Yahoo Slurp.

DuckDuckBot

DuckDuckGo compiles results from various sources, such as specialized Instant Answers, their proprietary crawler (DuckDuckBot), and community-driven platforms like Wikipedia.

Additionally, it incorporates standard search links from Yahoo! and Bing into its search results.

Applebot

Advantages of using list crawlers

- List crawlers work through pages systematically, extract vital information such as contact details and product listings, and replace manual methods with smoother data retrieval.

- They improve data accuracy, remove duplicates, and keep data more consistent than error-prone manual methods, and they are adept at precise extraction.

- From lead generation to competitive research, creating datasets for market analysis, and accumulating diverse data sources, web crawlers help users navigate websites efficiently.

- From maintaining data consistency to extracting relevant data precisely, a list crawler handles the tasks that make manual collection error-prone.

- By automating data collection and completing repetitive tasks faster, they speed up decision-making and data analysis, freeing individuals and organizations from these daunting tasks.

- Reduce human error and ensure data accuracy.

- Help businesses stay up-to-date by automating the process of gathering data on a regular basis.

Disadvantages of using list crawlers

- Sometimes list crawlers create inconsistent data structures, collect outdated information, and compromise data reliability.

- Websites with a dynamic nature and frequent updates pose a challenge to crawlers. Changing page structures and JavaScript-loaded content hinder accurate data extraction.

- Crawlers require regular maintenance to adapt to evolving website structures and data formats.

- Scraping can raise legal and ethical concerns, sometimes even when no specific misuse is involved.

- With heavy reliance on the structure and organization of websites, list crawlers are vulnerable to changes in website layout.

- Anti-scraping measures such as CAPTCHA, IP blocking, and user agent detection act as obstacles for list crawlers and restrict data collection.

Facts and statistics

- A list crawler is essential software for creating search engine indexes, extracting specific details like email addresses or phone numbers, and collecting data about specific pages.

- List crawlers are very useful for up-to-date business data and accurate market research. A survey conducted by BrightLocal reportedly found that 90% of marketers consider list crawlers very useful for their marketing efforts.

- Googlebot illustrates the importance of web crawlers in organizing and indexing online information: this web crawling bot reportedly processes over 20 billion web pages every day.

- For targeted information retrieval, crawlers can be customized.

- It is important that list crawlers adhere to website terms and legal regulations. Any kind of misuse may result in legal consequences.

Conclusion

From the above blog, it is evident that in this expanding digital landscape, the role of list crawlers is essential. As technology changes, crawlers are also advancing.

With the rise of millions of websites, online data collection and analysis will undoubtedly become even more competitive, shaping the way businesses and researchers act in this online world.

There is no doubt that list crawlers are aiding businesses and users with valuable insights and also helping them streamline data-related processes.

However, it is essential to use these tools responsibly and ethically, in line with each website's terms of service and applicable legal regulations.
